STAT 3202 @ OSU

Autumn 2018 Dalpiaz


Point Estimation I: Bias, Variance, and Mean Squared Error

The focus of this topic is measuring variability and error in estimation. To do so, we will investigate three properties of estimators: bias, variance, and mean squared error.

Suggested Reading

- WMS: 3.3

- WMS: 4.3

- WMS: 5.4 - 5.8

- WMS: 8.1 - 8.3

Prerequisites

Expectation Definitions

The expected value of some function of a discrete random variable is defined as

\[ \text{E}[g(X)] \triangleq \sum_{x} g(x)p(x) \]

For continuous random variables we have a similar definition.

\[ \text{E}[g(X)] \triangleq \int_{-\infty}^{\infty} g(x)f(x) dx \]

Two particular expectations are often of interest: the mean and the variance.

The mean of a random variable is defined to be

\[ \mu_{X} = \text{mean}[X] \triangleq \text{E}[X] \]

For a discrete random variable we would have

\[ \mu_{X} = \text{mean}[X] = \sum_{x} x \cdot p(x) \]

For a continuous random variable, we would essentially replace the sum with an integral.

The variance of a random variable \(X\) is given by

\[ \sigma^2_{X} = \text{var}[X] \triangleq \text{E}[(X - \mu_X)^2] = \text{E}[X^2] - (\mu_X)^2. \]

The standard deviation of a random variable \(X\) is given by

\[ \sigma_{X} = \text{sd}[X] \triangleq \sqrt{\sigma^2_{X}} = \sqrt{\text{var}[X]}. \]
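As a quick check of these definitions, the sketch below computes the mean, the variance (in both forms), and the standard deviation of a discrete random variable by summing \(g(x)p(x)\) directly. The fair six-sided die and the helper name `expect` are hypothetical choices for illustration; exact fractions are used so the two variance formulas can be compared without rounding.

```python
from fractions import Fraction

# Hypothetical example: X is one roll of a fair six-sided die,
# so p(x) = 1/6 for x = 1, ..., 6.
pmf = {x: Fraction(1, 6) for x in range(1, 7)}

def expect(g, pmf):
    """E[g(X)] = sum over x of g(x) p(x) for a discrete pmf {value: probability}."""
    return sum(g(x) * p for x, p in pmf.items())

mean = expect(lambda x: x, pmf)
var_def = expect(lambda x: (x - mean) ** 2, pmf)       # E[(X - mu)^2]
var_short = expect(lambda x: x ** 2, pmf) - mean ** 2  # E[X^2] - mu^2
sd = float(var_def) ** 0.5                             # sqrt(var[X])

print(mean, var_def, var_short)  # 7/2 35/12 35/12
```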

The covariance of random variables \(X\) and \(Y\) is given by

\[ \text{cov}[X, Y] \triangleq \text{E}[(X - \text{E}[X])(Y - \text{E}[Y])] = \text{E}[XY] - \text{E}[X] \cdot \text{E}[Y]. \]
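The same approach works for covariance once we have a joint pmf. The sketch below uses a small, hypothetical joint distribution for two 0/1 random variables and confirms that the definition and the shortcut formula give the same value.

```python
from fractions import Fraction

# Hypothetical joint pmf p(x, y) for two 0/1 random variables that tend to agree.
joint = {
    (0, 0): Fraction(2, 5),
    (0, 1): Fraction(1, 10),
    (1, 0): Fraction(1, 10),
    (1, 1): Fraction(2, 5),
}

def expect(g):
    """E[g(X, Y)] computed from the joint pmf above."""
    return sum(g(x, y) * p for (x, y), p in joint.items())

ex = expect(lambda x, y: x)
ey = expect(lambda x, y: y)
cov_def = expect(lambda x, y: (x - ex) * (y - ey))  # E[(X - E[X])(Y - E[Y])]
cov_short = expect(lambda x, y: x * y) - ex * ey    # E[XY] - E[X]E[Y]

print(cov_def, cov_short)  # 3/20 3/20
```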

Expectation Rules

If \(X\) is a random variable with mean \(\text{E}[X]\) and variance \(\text{Var}[X]\), then

\[ \text{E}[a X + b] = a \cdot \text{E}[X] + b \]

\[ \text{Var}[a X + b] = a^2 \cdot \text{Var}[X] \]
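A minimal check of these two rules, assuming a fair die as a hypothetical \(X\) with \(a = 2\) and \(b = 3\): transform each value of \(X\), keep the probabilities, and recompute the mean and variance directly.

```python
from fractions import Fraction

# Hypothetical X: a fair die, p(x) = 1/6 for x = 1, ..., 6.
pmf = {x: Fraction(1, 6) for x in range(1, 7)}

def mean_var(pmf):
    """Return (E[X], Var[X]) for a discrete pmf given as {value: probability}."""
    mu = sum(x * p for x, p in pmf.items())
    return mu, sum((x - mu) ** 2 * p for x, p in pmf.items())

a, b = 2, 3
mu, var = mean_var(pmf)

# Distribution of aX + b: same probabilities attached to the transformed values.
pmf_lin = {a * x + b: p for x, p in pmf.items()}
mu_lin, var_lin = mean_var(pmf_lin)

assert mu_lin == a * mu + b     # E[aX + b] = a E[X] + b
assert var_lin == a ** 2 * var  # Var[aX + b] = a^2 Var[X]; the shift b drops out
print(mu_lin, var_lin)  # 10 35/3
```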

If \(X\) and \(Y\) are random variables with

- \(\text{E}[X] = \mu_X\)

- \(\text{Var}[X] = \sigma^2_X\)

- \(\text{E}[Y] = \mu_Y\)

- \(\text{Var}[Y] = \sigma^2_Y\)

- \(\text{Cov}[X, Y] = \sigma_{XY}\)

Then

\[ \text{E}[a X + b Y + c] = a\mu_X + b\mu_Y +c \]

and

\[ \text{Var}[a X + b Y + c] = a^2\sigma^2_X + b^2\sigma^2_Y + 2ab\sigma_{XY}. \]

If \(X\) and \(Y\) are independent random variables, then \(\text{cov}[X, Y] = 0\). (The converse is not necessarily true.)

Thus, if \(X\) and \(Y\) are independent, the above becomes

\[ \text{Var}[a X + b Y + c] = a^2\sigma^2_X + b^2\sigma^2_Y. \]
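To see why the \(2ab\sigma_{XY}\) term matters, the sketch below computes \(\text{Var}[aX + bY + c]\) directly from a hypothetical joint pmf in which \(X\) and \(Y\) are dependent, and compares it with the general formula. The constants \(a = 2\), \(b = -1\), \(c = 5\) are arbitrary.

```python
from fractions import Fraction

# Hypothetical joint pmf in which X and Y are dependent (positively correlated).
joint = {(0, 0): Fraction(2, 5), (0, 1): Fraction(1, 10),
         (1, 0): Fraction(1, 10), (1, 1): Fraction(2, 5)}

def expect(g):
    """E[g(X, Y)] computed from the joint pmf above."""
    return sum(g(x, y) * p for (x, y), p in joint.items())

mx, my = expect(lambda x, y: x), expect(lambda x, y: y)
vx = expect(lambda x, y: (x - mx) ** 2)
vy = expect(lambda x, y: (y - my) ** 2)
cxy = expect(lambda x, y: (x - mx) * (y - my))

a, b, c = 2, -1, 5
# Direct computation of Var[aX + bY + c] from the joint pmf.
mz = expect(lambda x, y: a * x + b * y + c)
vz_direct = expect(lambda x, y: (a * x + b * y + c - mz) ** 2)
# General formula: a^2 Var[X] + b^2 Var[Y] + 2ab Cov[X, Y].
vz_formula = a ** 2 * vx + b ** 2 * vy + 2 * a * b * cxy

print(vz_direct, vz_formula)  # 13/20 13/20
```

Dropping the covariance term, which is only justified under independence, would give \(5/4\) here instead of \(13/20\).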

If \(X\) and \(Y\) are independent and

- \(X \sim N(\mu_X, \sigma^2_X)\)

- \(Y \sim N(\mu_Y, \sigma^2_Y)\)

Then

\[ a X + b Y + c \sim N(a\mu_X + b\mu_Y + c, a^2\sigma^2_X + b^2\sigma^2_Y) \]
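A Monte Carlo sketch can check the mean and variance of \(aX + bY + c\) for independent normals. The parameter values, seed, and number of draws are hypothetical, and the check only compares the first two moments, not the shape of the distribution. Note that Python's `random.gauss` takes a standard deviation rather than a variance.

```python
import random
import statistics

# Hypothetical parameter choices for a quick simulation check.
random.seed(3202)
mu_x, var_x = 1.0, 4.0
mu_y, var_y = -2.0, 9.0
a, b, c = 3.0, 2.0, 1.0
n_sims = 200_000

# Independent draws of X and Y; random.gauss(mean, sd) expects a standard deviation.
draws = [a * random.gauss(mu_x, var_x ** 0.5) + b * random.gauss(mu_y, var_y ** 0.5) + c
         for _ in range(n_sims)]

print(statistics.fmean(draws), a * mu_x + b * mu_y + c)             # both near 0
print(statistics.variance(draws), a ** 2 * var_x + b ** 2 * var_y)  # both near 72
```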

The above rules can often be chained together when considering more than two random variables.

A couple of general consequences of the above:

If \(X_1, X_2, \ldots, X_n\) is a random sample (thus \(X_1, X_2, \ldots, X_n\) are IID) from some population with finite mean \(\mu\) and variance \(\sigma^2\), then the sample mean,

\[ \bar{X} = \bar{X}(X_1, X_2, \ldots, X_n) = \frac{1}{n}\sum_{i = 1}^{n} X_i \]

has the following properties:

\[ \text{E}[\bar{X}] = \mu \]

\[ \text{Var}[\bar{X}] = \frac{\sigma^2}{n} \]
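Both results follow by chaining the rules above: linearity of expectation gives the mean, and because the \(X_i\) are independent, every covariance term vanishes in the variance.

\[ \text{E}[\bar{X}] = \frac{1}{n}\sum_{i = 1}^{n} \text{E}[X_i] = \frac{1}{n} \cdot n\mu = \mu \]

\[ \text{Var}[\bar{X}] = \frac{1}{n^2}\sum_{i = 1}^{n} \text{Var}[X_i] = \frac{1}{n^2} \cdot n\sigma^2 = \frac{\sigma^2}{n} \]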

If additionally each \(X_i\) follows a normal distribution with mean \(\mu\) and variance \(\sigma^2\), then

\[ \bar{X} \sim N\left(\mu, \frac{\sigma^2}{n}\right) \]
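A simulation sketch of these properties, assuming a hypothetical \(N(10, 4)\) population and samples of size \(n = 25\): draw many samples, record each sample mean, and compare the average and variance of those means with \(\mu\) and \(\sigma^2 / n\).

```python
import random
import statistics

# Hypothetical settings: N(mu = 10, sigma^2 = 4) population, samples of size n = 25.
random.seed(42)
mu, sigma, n = 10.0, 2.0, 25
n_reps = 20_000

# Each replicate draws a fresh sample and records its sample mean.
xbars = [statistics.fmean(random.gauss(mu, sigma) for _ in range(n))
         for _ in range(n_reps)]

print(statistics.fmean(xbars))     # close to mu = 10
print(statistics.variance(xbars))  # close to sigma^2 / n = 0.16
```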

Estimators

An estimator is just a fancy name for a statistic that attempts to estimate a parameter of interest. Thus, like statistics, estimators are random variables that have distributions.

When we write

\[ \hat{\theta} = f(X_1, X_2, \ldots, X_n) = \frac{1}{n}\sum_{i = 1}^{n} X_i \]

this is an estimator. It is a function of random variables, so \(\hat{\theta}\) itself is a random variable which has a distribution. We think of estimators this way when we want to discuss the statistical properties of an estimator based on the fact that the estimator could be applied to any possible (random) sample.

When we write

\[ \hat{\theta} = \frac{1}{n}\sum_{i = 1}^{n} x_i = 5 \]

this is an estimate: the result of applying the estimator to a particular, observed sample. Because the data have already been observed, there is no remaining uncertainty about them, so an estimate is not random and does not have a distribution.
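In code, the distinction looks roughly like this: the estimator is a function, and an estimate is that function evaluated on one fixed data set. The function name `sample_mean` and the data values are hypothetical, chosen so the estimate matches the value 5 above.

```python
import random

def sample_mean(xs):
    """The estimator: a rule that maps any sample to a number."""
    return sum(xs) / len(xs)

# An estimate: the estimator applied to one particular, already observed sample.
observed = [3, 5, 7, 4, 6]  # hypothetical data
print(sample_mean(observed))  # 5.0

# Applied to fresh random samples, the same estimator gives different values,
# which is why the estimator (but not the estimate) has a distribution.
random.seed(1)
print([round(sample_mean([random.gauss(5, 2) for _ in range(5)]), 2) for _ in range(3)])
```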

As a general estimation setup, we will most often consider a random sample \(X_1, X_2, \ldots, X_n\) from some population with finite mean \(\mu\) and variance \(\sigma^2\).

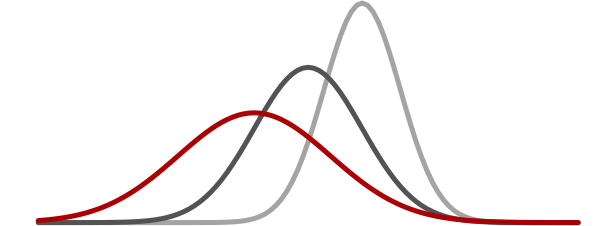

Bias

The bias of estimating a parameter \(\theta\) using the estimator \(\hat{\theta}\) is defined as

\[ \text{bias}\left[\hat{\theta}\right] \triangleq \text{E}\left[\hat{\theta}\right] - \theta \]

- An estimator with positive bias is overestimating on average.

- An estimator with zero bias is said to be unbiased.

- An estimator with negative bias is underestimating on average.

Often, when we calculate bias, it will be a function of the true parameter, \(\theta\), and possibly the sample size, \(n\).
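As an illustration, consider estimating \(\theta = \sigma^2\) with the variance estimator that divides by \(n\) instead of \(n - 1\). Its expected value is \(\frac{n - 1}{n}\sigma^2\), so its bias is \(-\sigma^2 / n\), a function of both the true parameter and the sample size. The sketch below approximates the bias by simulation; the normal population, \(\sigma^2 = 4\), \(n = 5\), and the helper `var_n` are hypothetical choices.

```python
import random
import statistics

# Hypothetical setup: estimate theta = sigma^2 = 4 from N(0, 4) samples of size n = 5.
random.seed(7)
sigma2, n, n_reps = 4.0, 5, 50_000

def var_n(xs):
    """Variance estimator that divides by n instead of n - 1."""
    m = sum(xs) / len(xs)
    return sum((x - m) ** 2 for x in xs) / len(xs)

estimates = [var_n([random.gauss(0.0, sigma2 ** 0.5) for _ in range(n)])
             for _ in range(n_reps)]

bias_sim = statistics.fmean(estimates) - sigma2  # approximates E[theta_hat] - theta
bias_theory = -sigma2 / n                        # -sigma^2 / n for this estimator
print(round(bias_sim, 3), bias_theory)           # simulated value near -0.8
```

The negative bias means this estimator underestimates \(\sigma^2\) on average, and the bias shrinks as \(n\) grows.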

Variance

The variance of estimating a parameter \(\theta\) using the estimator \(\hat{\theta}\) is defined as

\[ \text{var}\left[\hat{\theta}\right] = \text{E}\left[\left(\hat{\theta} - \text{E}\left[\hat{\theta}\right]\right) ^ 2\right] \]

Technically, this definition does not involve \(\theta\) at all. The variance of an estimator measures how variable the estimator is about its own expected value, not about the true value of the parameter.

Often, when we calculate variance, it will be a function of the variance of the population, \(\sigma^2\), and the sample size, \(n\).

Mean Squared Error

The mean squared error of estimating a parameter \(\theta\) using the estimator \(\hat{\theta}\) is defined as

\[ \text{MSE}\left[\hat{\theta}\right] = \text{E}\left[(\hat{\theta} - \theta) ^ 2\right] = \left( \text{bias}\left[\hat{\theta}\right] \right)^2 + \text{var}\left[\hat{\theta}\right] \]

The mean squared error also measures how variable the estimator is, but this time about the true value of the parameter. It is the average squared error. When an estimator is unbiased, the mean squared error is equal to the variance.
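The decomposition follows by adding and subtracting \(\text{E}[\hat{\theta}]\) inside the square. The cross term vanishes because \(\text{E}\left[\hat{\theta} - \text{E}[\hat{\theta}]\right] = 0\), and \(\text{E}[\hat{\theta}] - \theta\) is a constant.

\[ \text{E}\left[(\hat{\theta} - \theta)^2\right] = \text{E}\left[\left( \left(\hat{\theta} - \text{E}[\hat{\theta}]\right) + \left(\text{E}[\hat{\theta}] - \theta\right) \right)^2\right] \]

\[ = \text{E}\left[\left(\hat{\theta} - \text{E}[\hat{\theta}]\right)^2\right] + 2\left(\text{E}[\hat{\theta}] - \theta\right)\text{E}\left[\hat{\theta} - \text{E}[\hat{\theta}]\right] + \left(\text{E}[\hat{\theta}] - \theta\right)^2 = \text{var}\left[\hat{\theta}\right] + \left( \text{bias}\left[\hat{\theta}\right] \right)^2 \]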

Often, when we calculate mean squared error, it will be a function of the true parameter, \(\theta\), the variance of the population, \(\sigma^2\), and the sample size, \(n\).
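A numerical sketch of the decomposition: simulate many estimates, then compute the average squared error, the squared bias, and the variance of those estimates. For the empirical distribution of the simulated estimates (variance computed with divisor equal to the number of estimates), the identity holds exactly up to floating-point rounding. The estimator (divide-by-\(n\) sample variance via `statistics.pvariance`), \(\theta = \sigma^2 = 4\), and \(n = 5\) are hypothetical choices.

```python
import random
import statistics

# Hypothetical estimator: the divide-by-n sample variance, with theta = sigma^2 = 4, n = 5.
random.seed(11)
theta, n = 4.0, 5
estimates = [statistics.pvariance([random.gauss(0.0, 2.0) for _ in range(n)])
             for _ in range(20_000)]

mse = statistics.fmean((e - theta) ** 2 for e in estimates)  # average squared error
bias = statistics.fmean(estimates) - theta                   # average error
var = statistics.pvariance(estimates)                        # spread about the average estimate

print(mse, bias ** 2 + var)  # equal up to floating-point rounding
```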

Point Estimation II: Consistency and Sufficiency

In this topic we introduce two new concepts: consistency and sufficiency. Consistency is another way of evaluating an estimator, this time in an asymptotic sense. Sufficiency both indicates that we are using the available data properly and helps us start thinking about creating estimators.

Suggested Reading

- WMS: 9.3 - 9.4

Consistency

An estimator \(\hat{\theta}_n\) is said to be a consistent estimator of \(\theta\) if, for any positive \(\epsilon\),

\[ \lim_{n \rightarrow \infty} P( | \hat{\theta}_n - \theta | \leq \epsilon) =1 \]

or, equivalently,

\[ \lim_{n \rightarrow \infty} P( | \hat{\theta}_n - \theta | > \epsilon) =0 \]

We say that \(\hat{\theta}_n\) converges in probability to \(\theta\) and we write \(\hat{\theta}_n \overset P \rightarrow \theta\).

Theorem: An unbiased estimator \(\hat{\theta}_n\) for \(\theta\) is a consistent estimator of \(\theta\) if

\[ \lim_{n \rightarrow \infty} \text{Var}\left[\hat{\theta}_n\right] = 0 \]
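One way to see why this holds, sketched with Chebyshev's inequality: since \(\text{E}[\hat{\theta}_n] = \theta\), for any \(\epsilon > 0\),

\[ P\left( | \hat{\theta}_n - \theta | > \epsilon \right) \leq \frac{\text{E}\left[(\hat{\theta}_n - \theta)^2\right]}{\epsilon^2} = \frac{\text{Var}\left[\hat{\theta}_n\right]}{\epsilon^2} \rightarrow 0 \quad \text{as } n \rightarrow \infty. \]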

The (Weak) Law of Large Numbers

If \(Y_1, Y_2, \ldots, Y_n\) is a random sample such that

- \(\text{E}[Y_i] = \mu\)

- \(\text{Var}[Y_i] = \sigma^2 < \infty\)

Then

\[ \bar{Y}_n \overset P \rightarrow \mu. \]

(That is, \(\bar{Y}_n = \frac{1}{n} \sum_{i=1}^n Y_i\) is a consistent estimator of \(\mu\).)
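A Monte Carlo sketch of the law of large numbers: estimate \(P(|\bar{Y}_n - \mu| > \epsilon)\) for increasing \(n\) and watch it shrink toward zero. The Uniform(0, 1) population (so \(\mu = 0.5\)), \(\epsilon = 0.05\), the seed, and the helper `prob_far` are hypothetical choices.

```python
import random

# Hypothetical setup: Y_i ~ Uniform(0, 1), so mu = 0.5; tolerance epsilon = 0.05.
random.seed(2018)
mu, eps, n_reps = 0.5, 0.05, 1_000

def prob_far(n):
    """Monte Carlo estimate of P(|Ybar_n - mu| > eps) for samples of size n."""
    far = 0
    for _ in range(n_reps):
        ybar = sum(random.random() for _ in range(n)) / n
        far += abs(ybar - mu) > eps  # counts samples whose mean misses mu by more than eps
    return far / n_reps

probs = [prob_far(n) for n in (10, 100, 1000)]
print(probs)  # decreasing toward 0 as n grows
```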

Additional Results

Theorem: Suppose that \(\hat{\theta}_n \overset P \rightarrow \theta\) and that \(\hat{\beta}_n \overset P \rightarrow \beta\). Then

- \(\hat{\theta}_n + \hat{\beta}_n \overset P \rightarrow \theta + \beta\)

- \(\hat{\theta}_n \times \hat{\beta}_n \overset P \rightarrow \theta \times \beta\)

- \(\hat{\theta}_n \div \hat{\beta}_n \overset P \rightarrow \theta \div \beta\), provided \(\beta \neq 0\)

- If \(g(\cdot)\) is a real-valued function that is continuous at \(\theta\), then \(g(\hat{\theta}_n) \overset P \rightarrow g(\theta)\)
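A sketch of the last result: if \(Y_i \sim \text{Bernoulli}(p)\), the sample proportion \(\hat{p}\) is a sample mean, so \(\hat{p} \overset P \rightarrow p\), and since \(g(t) = t(1 - t)\) is continuous, \(g(\hat{p}) \overset P \rightarrow p(1 - p)\). The value \(p = 0.3\), the seed, and the sample sizes are hypothetical.

```python
import random

# Hypothetical setup: Y_i ~ Bernoulli(p) with p = 0.3, so g(p) = p(1 - p) = 0.21.
random.seed(3)
p = 0.3

def g(t):
    """A continuous function of the parameter: the Bernoulli variance t(1 - t)."""
    return t * (1 - t)

results = {}
for n in (10, 1_000, 100_000):
    p_hat = sum(random.random() < p for _ in range(n)) / n  # sample proportion
    results[n] = g(p_hat)
    print(n, round(results[n], 4))  # approaches 0.21 as n grows
```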

Sufficiency

Suppose we have a random sample \(Y_1, \ldots, Y_n\) from a \(N(\mu, \sigma^2)\) population, with mean \(\mu\) (unknown) and variance \(\sigma^2\) (known).

To estimate \(\mu\), we have proposed using the sample mean \(\bar{Y}\). This is a nice, intuitive, unbiased estimator of \(\mu\) – but we could ask: does it encode all the information we can glean from the data about the parameter \(\mu\)?

- Another way of asking this question: if I collected the data and calculated \(\bar{Y},\) and I kept the data secret and only told you \(\bar{Y}\), do I have any more information than you do about where \(\mu\) is?

In this model, the answer is: \(\bar{Y}\) does encode all the information in the data about the location of \(\mu\) – there is nothing more we can get from the actual data values \(Y_1, \ldots, Y_n.\)

- We will call \(\bar{Y}\) a sufficient statistic for \(\mu\).

Definition: Let \(Y_1, \ldots, Y_n\) denote a random sample from a probability distribution with unknown parameter \(\theta\). Then a statistic \(U = g(Y_1, \ldots, Y_n)\) is said to be sufficient for \(\theta\) if the conditional distribution of \(Y_1, \ldots, Y_n\) given \(U\) does not depend on \(\theta\).
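The definition can be checked by brute force in a simple discrete model. The sketch below swaps in a hypothetical Bernoulli(\(p\)) sample with \(U = \sum Y_i\) (not the normal model above): it enumerates every 0/1 sequence with a given sum and computes its conditional probability given \(U\) under two different values of \(p\). Every such sequence turns out to have conditional probability \(1 / \binom{n}{u}\), whatever \(p\) is, so \(U\) is sufficient for \(p\).

```python
from fractions import Fraction
from itertools import product
from math import comb

def joint_prob(ys, p):
    """P(Y_1 = y_1, ..., Y_n = y_n) for IID Bernoulli(p) data."""
    u = sum(ys)
    return p ** u * (1 - p) ** (len(ys) - u)

def conditional_given_sum(n, u, p):
    """P(Y = y | U = u) for every 0/1 sequence y of length n with sum u."""
    seqs = [ys for ys in product((0, 1), repeat=n) if sum(ys) == u]
    total = sum(joint_prob(ys, p) for ys in seqs)  # P(U = u)
    return {ys: joint_prob(ys, p) / total for ys in seqs}

n, u = 4, 2
cond_a = conditional_given_sum(n, u, Fraction(1, 5))
cond_b = conditional_given_sum(n, u, Fraction(7, 10))
print(cond_a == cond_b)      # True: the conditional distribution does not depend on p
print(set(cond_a.values()))  # {Fraction(1, 6)}, i.e. 1 / comb(4, 2)
```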

The Factorization Theorem

Let \(U\) be a statistic based on a random sample \(Y_1, Y_2, \ldots, Y_n\). Then \(U\) is a sufficient statistic for \(\theta\) if and only if the joint probability distribution or density function can be factored into two nonnegative functions,

\[ f(y_1, y_2, \ldots, y_n | \theta) = g(u, \theta) \cdot h(y_1, y_2, \ldots, y_n), \]

where \(g(u,\theta)\) is a function only of \(u\) and \(\theta\) and \(h(y_1, y_2, \ldots, y_n)\) is not a function of \(\theta\).
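As an example, the factorization theorem confirms the earlier claim about \(\bar{Y}\) in the \(N(\mu, \sigma^2)\) model with \(\sigma^2\) known. Writing out the joint density and using the identity \(\sum_{i=1}^{n}(y_i - \mu)^2 = \sum_{i=1}^{n}(y_i - \bar{y})^2 + n(\bar{y} - \mu)^2\),

\[ f(y_1, y_2, \ldots, y_n | \mu) = \prod_{i=1}^{n} \frac{1}{\sqrt{2\pi\sigma^2}} \exp\left(-\frac{(y_i - \mu)^2}{2\sigma^2}\right) = (2\pi\sigma^2)^{-n/2} \exp\left(-\frac{1}{2\sigma^2}\sum_{i=1}^{n}(y_i - \mu)^2\right) \]

\[ = \underbrace{\exp\left(-\frac{n(\bar{y} - \mu)^2}{2\sigma^2}\right)}_{g(\bar{y}, \mu)} \cdot \underbrace{(2\pi\sigma^2)^{-n/2} \exp\left(-\frac{1}{2\sigma^2}\sum_{i=1}^{n}(y_i - \bar{y})^2\right)}_{h(y_1, y_2, \ldots, y_n)} \]

Since \(\sigma^2\) is known, \(h\) does not involve \(\mu\), so \(\bar{Y}\) is sufficient for \(\mu\).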

One-To-One Functions of Sufficient Statistics

Any one-to-one function of a sufficient statistic is sufficient.